How can you tell whether a shopkeeper who is smiling at you is doing so out of politeness or is about to cheat you? We humans sometimes read a smile correctly, but as outside observers we can rarely tell one kind of smile from another. Researchers at MIT have sought an answer to this confusion in technology they developed.

The researchers tried to zoom in on different kinds of smiles and deconstruct them into notable facial features, and then wondered whether it is possible to train a computer to recognize some of these smiles automatically.

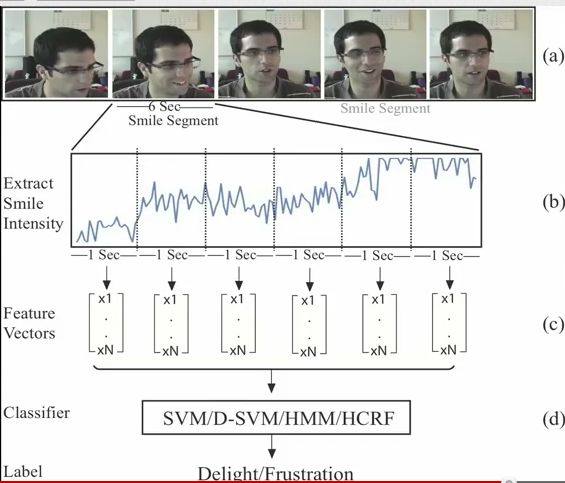

The major bottleneck in this kind of research is that the researchers need a lot of samples of spontaneous smiles. For this work, people were brought into the lab and given a long, tedious form to fill out that was intentionally designed to be buggy: regardless of what they typed, as soon as they hit the Submit button the form cleared and returned them to the beginning. Surprisingly, many people, though extremely frustrated, were smiling to cope with the situation. In the accompanying snapshot you can see two things: the participant shows action unit (AU) 12, also known as the lip corner puller, and also AU6, the cheek raiser.

Based on research, when these two facial muscles are involved you are more likely to be in a happy state. However, if you follow how the pattern of signals progresses through time instead of just looking at a snapshot, it may be able to tell you more about the expression, says M. Ehsan Hoque, a research assistant at the MIT Media Lab.
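To make the snapshot-versus-time idea concrete, here is a minimal Python sketch. It is not the researchers' method: the function name `temporal_features`, the 0-to-1 intensity scale, the frame rate, and the 0.5 threshold are all assumptions chosen for illustration. It takes a per-frame smile intensity (for example, how strongly AU12 is active) and summarizes how the signal unfolds over time.

```python
from typing import Dict, List


def temporal_features(
    intensity: List[float], fps: float = 30.0, threshold: float = 0.5
) -> Dict[str, float]:
    """Summarize how a per-frame smile intensity signal evolves over time.

    All parameters are illustrative assumptions: `intensity` is a 0..1
    value per video frame, `fps` is the frame rate, and `threshold` is
    the level above which we call the smile "active".
    """
    if not intensity:
        raise ValueError("intensity must not be empty")

    # Index and value of the first frame where the signal is strongest.
    peak_index = max(range(len(intensity)), key=lambda i: intensity[i])
    peak = intensity[peak_index]

    # Frames where the smile counts as active.
    active = [i for i, value in enumerate(intensity) if value >= threshold]
    if not active:
        return {"peak": peak, "rise_time_s": 0.0, "duration_s": 0.0}

    onset, offset = active[0], active[-1]
    return {
        "peak": peak,
        # Time from first active frame to the peak.
        "rise_time_s": (peak_index - onset) / fps,
        # Time from first to last active frame, inclusive.
        "duration_s": (offset - onset + 1) / fps,
    }


# Two made-up signals that reach the same peak intensity.
gradual = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0, 0.8, 0.6]
abrupt = [0.0, 1.0, 1.0, 0.0]

print(temporal_features(gradual, fps=10.0))
print(temporal_features(abrupt, fps=10.0))
```

Both made-up signals reach the same peak, so a single frame taken at the peak would look identical, yet their rise times and durations differ. Features of this kind are what a time-aware classifier could work with.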

So we have two different kinds of smiles: delighted smiles and frustrated smiles. Computer programs built on this research can tell these emotions apart. For delighted smiles, the computer algorithms performed as well as humans; for frustrated smiles, however, humans performed below chance, whereas the algorithms performed at more than 90 percent. One possible explanation is that we humans usually zoom out and try to interpret an expression as a whole, whereas computer algorithms can use the nitty-gritty details of a signal, which are much richer than what you get by zooming out and looking.

One really interesting application of the research is helping people with autism interpret expressions better. Often, in school or during therapy, they are told that if they see a lip corner pull, the person is more likely to be happy. This work demonstrates, however, that people can smile in different contexts and the meaning can be completely different. So if a smile can be deconstructed into features that are easy to practice, perhaps these could be taught, and people with autism could benefit.
